Epistemic status: This is mostly pure speculation, although grounded in many years of studying neuroscience and AI. Almost certainly, much of this picture will be wrong in the details, although hopefully roughly correct ‘in spirit’.

In neuroscience, there are so many different brain regions, and so much confusing terminology, that it is really hard to get a general overview of how the brain’s systems function and how the various pieces fit together. It is also very easy to study each individual piece in isolation without considering how all the different brain regions combine to produce a working system. Here, I’m going to try to provide a narrative and a high-level schematic of how and why the general structure of the brain evolved the way it did, and how the different regions functionally fit together. Specifically, what we want to build up is the high-level API of the fundamental brain regions, as well as an understanding of how such a complex system could have been built, piece by piece, by evolution.

To begin, we need a basic understanding of the three major components of the brain.

The first, and evolutionarily oldest, is the brainstem, which here means the pons and the medulla. It is the part which sits closest to your spinal cord, and it contains nuclei which implement the visceral reflexes necessary for survival, such as breathing, your heartbeat, and your circadian rhythm. It also contains nuclei which control general emotional state and release key neuromodulators. For instance, serotonin and norepinephrine (noradrenaline) are secreted from nuclei in the brainstem (dopamine is secreted from nuclei in the midbrain; this will be important later, as it suggests that ‘reward’ is a later evolutionary addition). The brainstem also performs quite a sophisticated amount of sensory information processing, especially related to the physiological and somatosensory state of the body, and possesses basic circuitry to flexibly modulate behaviour depending on physiological state.

Second is the midbrain (using the term loosely, to cover the subcortical structures above the brainstem), which sits at the ‘base’ of the brain just above the brainstem. It houses the basal ganglia, amygdala, tectum and hypothalamus. In general, it contains the basic reward-sensitive regions of the brain as well as areas involved in basic emotion and conditioning; it governs fight-or-flight responses, handles more complex homeostatic processing (in the hypothalamus), and controls a lot of motor behaviour. The midbrain also contains its own sensory processing systems for olfaction (the olfactory bulb), vision (the superior colliculus, in the tectum), and audition (the inferior colliculus, also in the tectum). It also contains the hippocampus and septal area, which are key for long-term memory formation.

Finally, there is the cortex, which forms the ‘outer wrapper’ of the brain. It provides complex sensory information processing (each sense has its own dedicated cortical regions), its own motor processing system (the motor cortex), and support for complex planning, decision making and (probably) more complex emotional regulation. Cortex is traditionally considered the seat of higher intelligence, and appears to be the basis for most cognitive capabilities such as planning and metacognition.

However, clearly, evolution did not start out with a fully formed brain. The brain was built up slowly, piece by piece, over evolutionary time, and at each stage it must have been functional and useful to the organism. This evolutionary history places constraints on possible brain designs. Thinking through what these constraints mean can also shed light on the current functionality of the brain and aid in understanding its modular structure.

Another perspective which I think is strongly underappreciated in neuroscience is that of API design, especially for a distributed system. The brain is fundamentally composed of many different specialized units, each with specific inputs and outputs, which come together to form a highly distributed and fault-tolerant system. Computer science has a lot of experience with both the challenges of building such systems and the techniques for doing so, while these sorts of issues are barely considered in neuroscience. Similarly, hardly any attention in neuroscience is paid to the fundamental problem of interfaces in the brain. The brain possesses many different modules, each using its own complex internal representations, which need to communicate effectively with each other, sometimes over channels with fairly limited bandwidth. Moreover, the complexity of the representations can differ substantially between sender and receiver, as when the cortex communicates directly with brainstem or spinal cord circuits. There is thus a fundamental translation problem in the brain: how can different regions learn to communicate their internal representations to each other efficiently? This is especially important between subcortical regions and at the cortical-subcortical interface, where it seems unlikely that end-to-end credit assignment with a backprop-like algorithm is possible.

The purpose of this post is to present a high level speculative account of how all the various brain regions have developed over evolutionary time, what the interfaces and APIs are, and how the different regions of the brain form an extensible and modular distributed system. The goal is to break down how the brain’s structure could have evolved and accreted new regions and functionality in a continually viable way during evolution. I warn again, though, that this is highly speculative, high-level, and probably wrong in many details. This story also gives us an intuition for the high level modular structure of the brain as well as why it has to be the way it is.

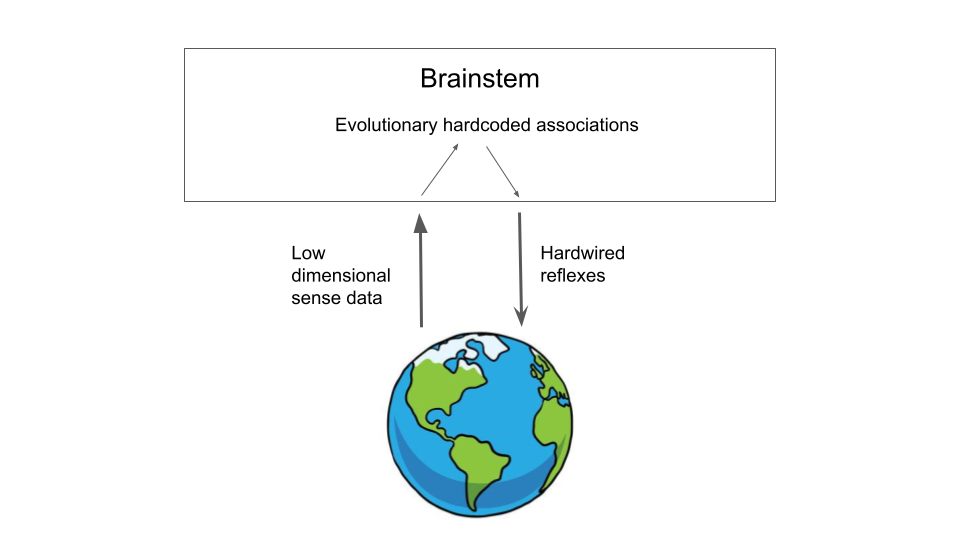

To begin, we start with the simplest possible creature with the simplest possible brain. Suppose you are a basic Precambrian worm surviving in the sediment. You have a pretty simple life. A small creature, you wiggle around mostly randomly, foraging for food. If you touched something, you would explore it to see if it is food. If it is, you would try to eat it. If you sensed food at a distance, via smell or chemoception, you would move towards it. If something unknown touched you, you would try to move away. Otherwise, you mostly move randomly, likely following a Lévy-flight pattern, which is near-optimal for foraging when food is sparse. These are the sorts of behaviours that the earliest brains evolved to handle, along with the regulation of various internal homeostatic feedback loops. In fact, these kinds of stimulus-response loops can be seen in nematode worms like C. elegans, where they are carefully choreographed by individual specialized neurons. Fascinatingly, similar loops are also implemented by chemical signalling pathways in single-celled organisms! In essence, your behavioural program consists of hardwired associations and responses to relatively low-dimensional sensory input, things like touch or chemoception. Evolution can hardwire these responses because the sense data is low-dimensional enough for there to be relatively simple mappings between stimulus and correct response. At this stage of evolution, your brain looks like Figure 1:

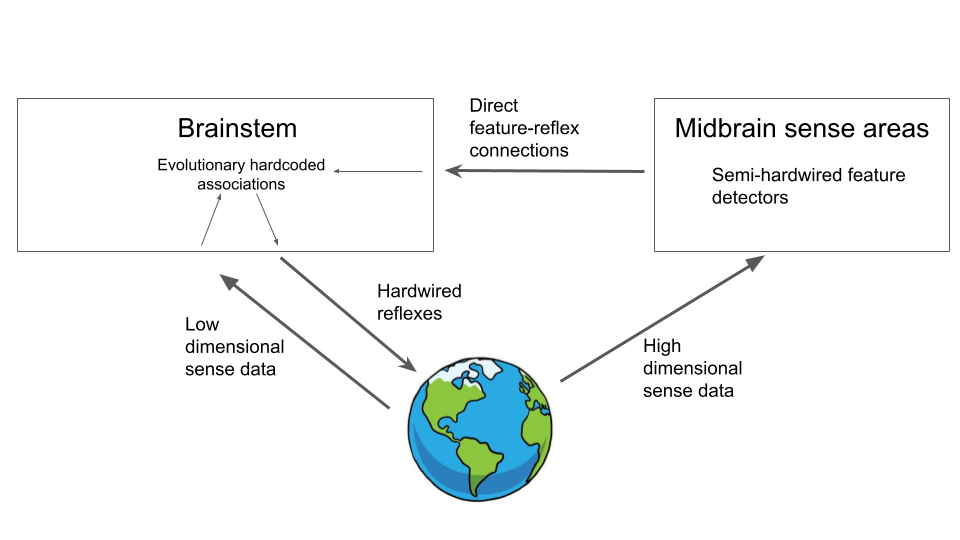
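As a toy illustration of such a hardwired behavioural program, here is a minimal Python sketch, assuming three made-up senses and a fixed priority order among the reflexes (both illustrative choices, not claims about any real worm). The random-walk step lengths are drawn from a power-law (Pareto) distribution, one common way to model a Lévy flight; the exponent `mu = 2` is an assumption. Nothing here learns: every mapping is fixed in advance, as evolution would fix it.

```python
import random
from dataclasses import dataclass

@dataclass
class Senses:
    touched_by_unknown: bool   # something unfamiliar is touching the body
    touching_food: bool        # contact chemoreceptors report food
    odor_gradient: float       # > 0 means the food smell gets stronger ahead

def reflex_policy(s: Senses) -> str:
    """Hardwired, priority-ordered stimulus-response rules; nothing is learned."""
    if s.touched_by_unknown:
        return "withdraw"
    if s.touching_food:
        return "eat"
    if s.odor_gradient > 0.0:
        return "approach"
    return "random_walk"

def levy_step(rng: random.Random, mu: float = 2.0, min_step: float = 1.0) -> float:
    """Step length with a power-law tail, p(l) ~ l**(-mu) for l >= min_step.

    random.paretovariate(alpha) has density alpha / x**(alpha + 1) on x >= 1,
    so alpha = mu - 1 gives the l**(-mu) tail.
    """
    return min_step * rng.paretovariate(mu - 1.0)

rng = random.Random(0)
for senses in [Senses(True, True, 1.0), Senses(False, True, 0.0),
               Senses(False, False, 0.3), Senses(False, False, 0.0)]:
    action = reflex_policy(senses)
    step = levy_step(rng) if action == "random_walk" else None
    print(action, step)
```

The priority ordering is the only ‘decision making’ in this sketch: withdrawal from an unknown touch overrides everything else, which is the kind of simple, low-dimensional mapping evolution can specify directly.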

Now, suppose that many millions of years have passed and you are now a Cambrian critter. You have evolved eyes! Evolutionary power-up activated. First things first, you have the immense problem of having to interpret the huge amount of data being streamed, millisecond by millisecond, through your shiny new eyes. You, or rather evolution, does what it can. It slowly creates a new set of brain modules, which we call the midbrain, on top of the old ones, and learns, over countless generations, how to wire neurons together so that crude feature detectors develop which can detect ecologically relevant stimuli such as predators or prey. It even develops some simple statistical learning algorithms to remove various redundancies in the signal and to compensate for weird data artefacts in the new sensory medium. You can also learn novel feature detectors based on statistical input patterns. Still, by and large the hardcoded feature detectors work tolerably, and you generally don’t live long enough for learning to be super effective over your lifetime. Nevertheless, even at this key stage, your brain has a problem. It has extracted these features from its sensory input, but that’s not enough. It doesn’t know what to do with them. Ideally, it would be able to take these features and use them to make survival-relevant decisions, like whether to run away from that fast-approaching predator, but how?

Well, what does your brain already have? Well-developed reflex-based systems which already possess exquisitely honed abilities to fight or flee. Indeed, the entire survival repertoire is already sitting there in the brainstem, battle-tested by millions of years of evolution. Clearly, it is easiest and most efficient to just interface with that system, rather than try to evolve an entirely new parallel survival subsystem from scratch. The problem, then, is how to integrate the new system with the old. They speak different languages. The old brainstem system consists of a set of reflexes mapping certain sensory input to a hardwired response: for instance, if something touches you, you flinch away. The new midbrain doesn’t speak this language. Instead it has a series of features. Feature 1632 is active now. That sort of thing. But what is feature 1632 anyway? The brainstem doesn’t know. Indeed, neither does the midbrain. The feature doesn’t necessarily have a direct ‘meaning’ like a low-dimensional touch signal. How can the brainstem figure out what to do with these new signals?

Luckily, the brainstem does know one thing: what it computes. It knows when it flinches away. It knows when it is hungry. It knows when it is in pain. All we need to do to integrate the midbrain features into the system is to learn a set of statistical associations between midbrain feature activations and brainstem reflex firing. If we find that midbrain feature X tends to co-activate with brainstem reflex Y, then we can start to build an associative connection between them. In machine learning terminology, we have a supervised learning problem: midbrain features are the inputs and brainstem reflexes are the targets. Since we have only a single ‘layer’ of connections, this problem is easy and we can learn it with a Hebbian delta rule. What this gives us is a way to assign brainstem-relevant meaning to the midbrain features. Our architecture now looks like this:

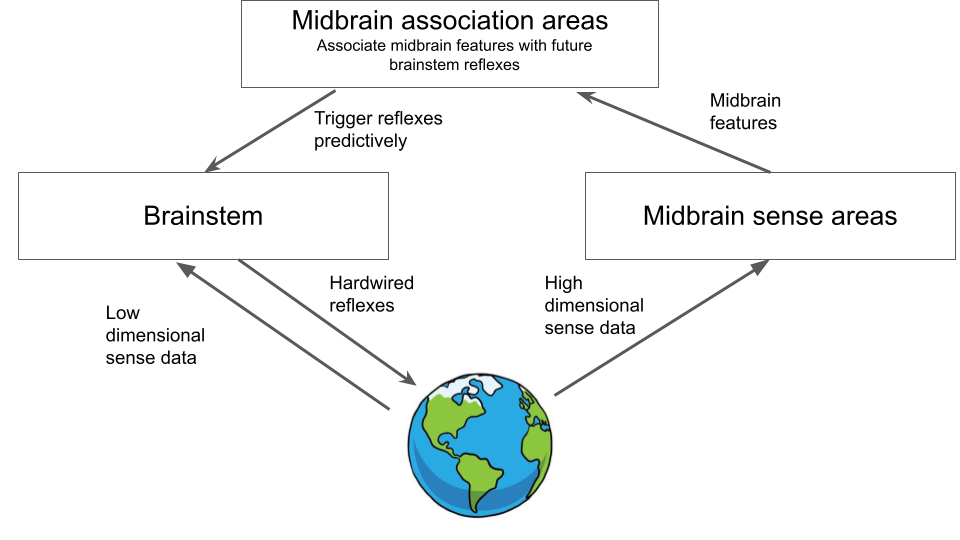
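A minimal sketch of the single-layer association learning described above, assuming binary midbrain features and binary reflex targets and using the delta (Widrow-Hoff) rule: each weight changes in proportion to the reflex’s prediction error times the presynaptic feature activity. The feature and reflex counts, the learning rate, and the synthetic rule that feature 2 co-occurs with ‘flee’ and feature 4 with ‘eat’ are all hypothetical.

```python
import random

N_FEATURES, N_REFLEXES = 6, 2   # hypothetical sizes
LR = 0.1                         # learning rate (assumed)

def predict(W, x):
    """Linear association: drive to each reflex = weighted sum of active features."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]

def delta_update(W, x, y, lr=LR):
    """Delta rule: dW_ij = lr * (y_i - y_hat_i) * x_j (error times presynaptic activity)."""
    y_hat = predict(W, x)
    for i, row in enumerate(W):
        err = y[i] - y_hat[i]
        for j in range(len(row)):
            row[j] += lr * err * x[j]

# Synthetic world (assumption): feature 2 always co-occurs with reflex 0 ("flee"),
# feature 4 with reflex 1 ("eat"); the other features are irrelevant noise.
rng = random.Random(0)
W = [[0.0] * N_FEATURES for _ in range(N_REFLEXES)]
for _ in range(2000):
    x = [1.0 if rng.random() < 0.3 else 0.0 for _ in range(N_FEATURES)]
    y = [x[2], x[4]]   # targets supplied by the brainstem reflexes themselves
    delta_update(W, x, y)

print([round(w, 2) for w in W[0]])   # weight from feature 2 near 1, others near 0
```

After training, the weights from the two predictive features approach 1 while the rest stay near 0, so the association layer has attached a brainstem ‘meaning’ to otherwise anonymous features.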

However, by itself, this isn’t yet super useful for survival. All we have done is associate certain reflexes with certain sensory features. This gives us a way to interpret our high-dimensional sense data, but, by construction, we are only learning features which co-activate with reflexes, so the reflexes are already happening. This architecture becomes useful when we introduce a temporal element to the equation, that is, when we learn these associations backwards in time. If we can remember what features the midbrain was observing before the brainstem was activated, then we can start to gain a predictive ability. Suppose feature X is typically followed by brainstem reflex Y activating in the future. Now, when feature X is active but reflex Y is not yet, we can activate Y ahead of time and respond proactively to a threat. This is the first big win. We can now see the predator coming towards us from far away; we have learnt that these features are associated with needing to flee in the future, so instead we can flee now, which will probably work better. If we have learnt that feature 1632 is commonly seen moments before the brainstem sends a signal to flee, then we just need to wire up a set of connections from that feature detector directly to the relevant brainstem reflexes, so that feature 1632 can trigger the flee reflex directly. This system is also useful for generalization. If a stimulus is often associated with another stimulus requiring a survival-based response (e.g. to approach and eat, or to fight or flee), then we can form an association such that seeing the first stimulus immediately activates the brainstem responses. This is essentially classical conditioning, and it is an extremely useful ability in the wild.
If we hear a rustling in the bushes, we don’t need to wait until we see a predator leaping out at us to run; we can associate the rustling with the need to run, and then flee immediately upon hearing the rustle. In theory, we can construct very long chains of associations in this way. This gets us an organism capable of various kinds of Pavlovian conditioning.
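Learning ‘backwards in time’ only needs each feature to leave a decaying memory of its recent activity, an eligibility trace, which is then used as the input to the same delta rule when the reflex fires (or fails to fire). The sketch below assumes a toy trial structure: on half the trials a ‘rustle’ at step 2 precedes a brainstem flee reflex at step 5, while an unrelated ‘distractor’ feature appears at random. The decay rate `GAMMA`, the learning rate, the trial layout and the firing threshold are illustrative assumptions.

```python
import random

GAMMA = 0.8        # per-step decay of each feature's eligibility trace (assumed)
LR = 0.2           # learning rate (assumed)
T_OUTCOME = 5      # step at which the brainstem flee reflex fires, if it fires
FEATURES = ("rustle", "distractor")

def run_trial(rng):
    """One synthetic trial: feature onsets per time step, and whether the reflex fired."""
    predator = rng.random() < 0.5
    onsets = {t: set() for t in range(T_OUTCOME + 1)}
    if predator:
        onsets[2].add("rustle")                  # a rustle always precedes the predator
    if rng.random() < 0.5:                       # the distractor is unrelated to predators
        onsets[rng.randrange(T_OUTCOME + 1)].add("distractor")
    return onsets, predator

def trace_at_outcome(onsets):
    """Eligibility trace e_f(t) = GAMMA * e_f(t-1) + x_f(t), read out at T_OUTCOME."""
    e = dict.fromkeys(FEATURES, 0.0)
    for t in range(T_OUTCOME + 1):
        for f in FEATURES:
            e[f] = GAMMA * e[f] + (1.0 if f in onsets[t] else 0.0)
    return e

rng = random.Random(0)
w = dict.fromkeys(FEATURES, 0.0)
for _ in range(3000):
    onsets, fired = run_trial(rng)
    e = trace_at_outcome(onsets)
    err = (1.0 if fired else 0.0) - sum(w[f] * e[f] for f in FEATURES)
    for f in FEATURES:
        w[f] += LR * err * e[f]                  # delta rule applied to the traces

def first_flee_time(onsets, threshold=0.5):
    """Fire as soon as the learned drive from currently active features crosses threshold."""
    for t in range(T_OUTCOME + 1):
        if sum(w[f] for f in onsets[t]) > threshold:
            return t
    return T_OUTCOME   # otherwise, wait for the brainstem's own reflex

trial = {t: set() for t in range(T_OUTCOME + 1)}
trial[2].add("rustle")
print(round(w["rustle"], 2), round(w["distractor"], 2), first_flee_time(trial))
```

The rustle’s weight converges to about `1 / GAMMA**3` (its trace has decayed for three steps by the time the reflex fires), the distractor’s to about 0, and the learned association now triggers fleeing at step 2, three steps before the brainstem would have.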

Of course, in real organisms things are more complicated, but this only makes these associations more beneficial, since we actually have multiple sensory modalities and physiological states, and it is worthwhile associating combinations of these with the eventual brainstem reflexes. Moreover, instead of learning direct links from each sensory modality to the brainstem, it is probably better to have a general ‘association area’ to which all the sensory regions send their information, where it can be associated across modalities and then correlated with brainstem responses. This is essentially an ‘API gateway’ architecture, which receives many disparate API requests (feature activations) and learns to route them to the right brainstem reflex. It also allows more complex multimodal evidence integration and contextual rules to develop. Instead of vision and hearing each having their own direct-to-brainstem connection, they both ping the association area, which can decide whether the combined evidence from both is sufficient to fire the brainstem reflex.
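The gateway idea can be sketched as a tiny Python class, with the caveat that every name here (`AssociationArea`, `submit`, `route`, and the modality and feature labels) is hypothetical and the association weights are hand-set rather than learned. Sensory modules only ever call `submit`; only the association area holds references to the brainstem reflex handlers, and a reflex fires only when the summed evidence across modalities reaches a threshold.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List

class AssociationArea:
    """Toy 'API gateway' between sensory modules and brainstem reflexes."""

    def __init__(self, brainstem: Dict[str, Callable[[], None]], threshold: float = 1.0):
        self.brainstem = brainstem               # reflex name -> handler; only we hold these
        self.threshold = threshold
        self.weights: Dict[tuple, float] = defaultdict(float)   # (modality, feature, reflex)
        self.evidence: Dict[str, float] = defaultdict(float)    # reflex -> evidence this step

    def submit(self, modality: str, active_features: Iterable[str]) -> None:
        """The only call sensory modules make; they never see the brainstem."""
        for feature in active_features:
            for reflex in self.brainstem:
                self.evidence[reflex] += self.weights[(modality, feature, reflex)]

    def route(self) -> List[str]:
        """Fire every reflex whose combined multimodal evidence reaches threshold."""
        fired = [r for r, ev in self.evidence.items() if ev >= self.threshold]
        for r in fired:
            self.brainstem[r]()
        self.evidence.clear()
        return fired

log: List[str] = []
amygdala = AssociationArea({"flee": lambda: log.append("flee")})
amygdala.weights[("vision", "dark_shape", "flee")] = 0.6   # hand-set, not learned
amygdala.weights[("audition", "rustle", "flee")] = 0.6

amygdala.submit("vision", ["dark_shape"])
print(amygdala.route())   # [] : 0.6 is below threshold
amygdala.submit("vision", ["dark_shape"])
amygdala.submit("audition", ["rustle"])
print(amygdala.route())   # ['flee'] : 0.6 + 0.6 reaches threshold
```

A dark shape alone does not trigger fleeing, but a dark shape plus a rustle does. In a fuller model the weights would be learned, for example with the trace-based delta rule, rather than set by hand.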

Such an architecture maintains the cleanness and modularity of the API. Each sensory region just sends its information to the association area, and the association area is the only module that communicates directly with the brainstem. We have achieved modularity and separation of concerns! In my model, the association area is the thalamus, amygdala and related circuitry, and the sensory areas are midbrain sensory regions such as the tectum (remember, we have no cortex yet!). In most brains, the amygdala appears to perform exactly this function of making associations between incoming sensory features and ultimate brainstem fear responses, which it can predict forward in time. Unsurprisingly, the amygdala and closely related circuitry are also the seat of classical conditioning in the brain. Moreover, learning these associations typically only requires extremely simple Hebbian learning along with eligibility traces, which can be fast, even one-shot, without any need for complex credit assignment through time. Given this predictive learning and these specialized association areas, our brain architecture now looks like this:

These abilities are nice, but of course we are still using the brainstem’s response as the ground truth, so ultimately we can never do better than the brainstem; we can only predict its responses before they happen. However, the brainstem can be wrong. It can react to things that are harmless, or be ignorant of things that are actually extremely dangerous. The brainstem is primarily programmed directly by evolution, so it is necessarily ignorant of anything specific to the animal’s own lifetime. It could also be ignorant of new threats that have arisen too recently for evolution to have selected good responses to them (this becomes increasingly important as animals develop longer lifespans, so that within-lifetime learning has a larger payoff and evolutionary selection pressure per unit time becomes weaker). Can we do better than the brainstem? To do so, we need to make the shift from reacting to optimizing.

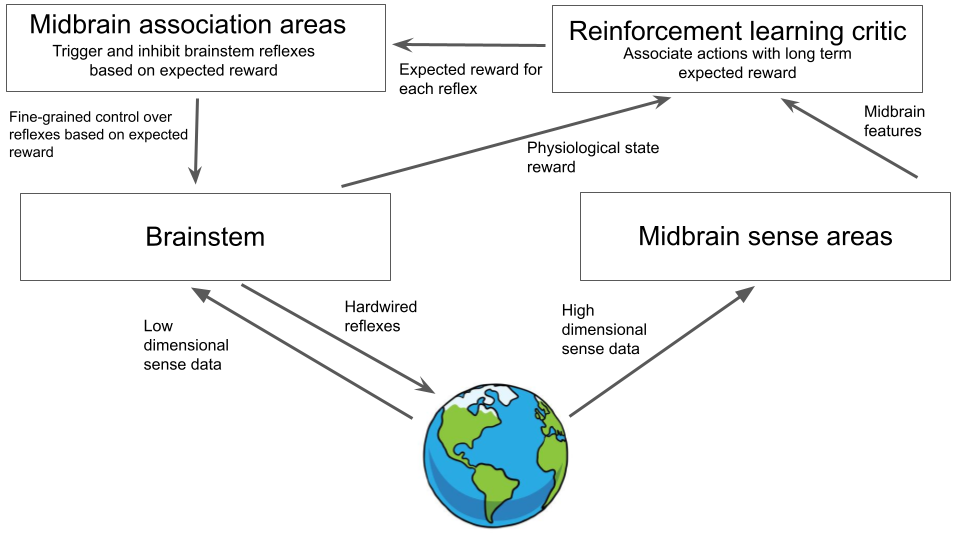

We already possess much of the sensory and learning apparatus needed to make better decisions. The key issue is credit assignment. We need some way to tell objectively when the brainstem’s hardcoded responses are wrong and when they are right. To do this we need another system: an independent critic which can “objectively” tell us, counterfactually, whether the brainstem’s response was correct or not. This system, I argue, is the likely origin of the dopaminergic reward system of the basal ganglia. The goal here is essentially to determine, after the fact, whether the hardwired response of the brainstem was correct, and to forward that information to the association areas (here just the amygdala and related circuitry) so that they can learn to trigger survival reflexes independently of the hardcoded brainstem responses.

How do we construct such a critic? The fundamental thing we need is some measure of success that is independent of the brainstem reflexes, yet still objective enough that optimizing it reliably leads to adaptive decisions. Although we cannot directly measure and optimize genetic fitness, as evolution would like, we have a set of pretty good proxies available: our physiological state. The brainstem is also responsible for representing and controlling the physiological state of the organism. Various physiological parameters, such as breathing, glucose levels and hormone levels, are held around a set of evolutionarily programmed set-points, and the control apparatus orchestrating this lives in the brainstem and midbrain (hypothalamus). These physiological states can also be used to modulate brainstem reflexes directly: if hungry, we should ignore danger signals in order to move towards food, but not if already satiated. These kinds of basic behavioural modulation can be implemented directly in the brainstem.

Importantly, what this physiological state information gives us is an objective measure of performance independent of the specific brainstem reflexes. While not an ideal proxy, physiological state serves as a lower bound on genetic fitness: if our physiological state departs too far from homeostasis, then we die, and our genetic fitness isn’t looking all that great. To construct a critic, all we need to do is pipe this physiological state information, specifically the degree of divergence from homeostatic equilibrium, into one end of the critic, where it forms our reward function. If we are close to homeostasis, we receive reward. If we are far away, we receive punishment (negative reward). The other input to the critic has to be the state information we wish to score. If we give the critic midbrain feature inputs, we can compute the expected long-term reward associated with specific sensory features. If we input the brainstem reflex actions, we can estimate their long-term value too. The critic can be trained with an incredibly simple RL algorithm like temporal difference learning, which only needs a single recurrent loop between a set of inputs and a set of neurons which represent reward prediction error, alongside Hebbian learning on a linear value estimator layer.
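A minimal sketch of such a critic, assuming a hypothetical reward that is positive near two made-up physiological set-points (glucose and temperature) and negative far from them, a linear value estimate over sparse midbrain features, and TD(0) updates. The variable `delta` plays the role of the reward prediction error the text attributes to dopaminergic neurons; the two hand-made episodes, the set-points, the tolerances and the learning rates are illustrative assumptions.

```python
SETPOINTS = {"glucose": 1.0, "temperature": 37.0}   # hypothetical set-points
TOLERANCE = {"glucose": 0.5, "temperature": 2.0}    # hypothetical scales
GAMMA, ALPHA = 0.9, 0.1                              # discount and learning rate (assumed)

def homeostatic_reward(phys):
    """Positive near the set-points, increasingly negative as we drift away."""
    deviation = sum(abs(phys[k] - SETPOINTS[k]) / TOLERANCE[k] for k in SETPOINTS)
    return 1.0 - deviation

def value(w, feats):
    """Linear value estimate over the currently active (binary) features."""
    return sum(w.get(f, 0.0) for f in feats)

def td0_update(w, feats, reward, next_feats, terminal):
    """TD(0): delta = r + GAMMA * V(s') - V(s); each active feature's weight += ALPHA * delta."""
    target = reward + (0.0 if terminal else GAMMA * value(w, next_feats))
    delta = target - value(w, feats)     # reward prediction error
    for f in feats:
        w[f] = w.get(f, 0.0) + ALPHA * delta
    return delta

HUNGRY = {"glucose": 0.3, "temperature": 37.0}
FED = {"glucose": 1.0, "temperature": 37.0}
# Each step: (active midbrain features, physiology after acting). Made-up episodes.
episodes = [
    [({"odor"}, HUNGRY), ({"contact"}, FED)],       # food odour -> contact -> eat
    [({"shadow"}, HUNGRY), ({"empty"}, HUNGRY)],    # shadow -> nothing happens
]

w = {}
for _ in range(500):
    for ep in episodes:
        for t, (feats, phys_after) in enumerate(ep):
            terminal = t == len(ep) - 1
            next_feats = set() if terminal else ep[t + 1][0]
            td0_update(w, feats, homeostatic_reward(phys_after), next_feats, terminal)

print({f: round(v, 2) for f, v in w.items()})
```

With `GAMMA = 0.9`, the critic learns that the food odour is worth about 0.5 (one mildly hungry step followed by a large homeostatic reward) while a shadow that leads nowhere is worth about -0.76, even though neither feature was ever directly rewarded.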

Crucially, once we have this critic, we can pipe its estimated values back to our association area and use them to drive and modulate responses. If a brainstem reflex is going to be activated by a feature, but we know from the critic that this has low expected value, then we decrease the association weights to lower the chance of activation. Conversely, if it has high expected value, we increase them. We have essentially converted our agent into a model-free RL agent whose action space is the set of brainstem reflexes controllable by the association area.
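One way to make this concrete, and only one of several possible choices, is a one-step actor-critic: the association weight for each feature sets the probability of firing a reflex through a logistic gate, the critic tracks the expected reward after that feature, and the weight is nudged by the reward prediction error times the gradient of the log-probability of what actually happened. The two features, their outcome rewards, the innate starting weight of 2.0 and the learning rates are hypothetical.

```python
import math
import random

ALPHA_V, BETA = 0.1, 0.2   # critic and actor learning rates (assumed)

# Hypothetical outcomes per feature: (reward if the flee reflex fires, reward if not).
OUTCOMES = {
    "leaf_shadow": (0.0, 1.0),       # harmless: fleeing only costs foraging time
    "predator_shape": (0.5, -5.0),   # dangerous: fleeing is clearly worth it
}
w = {f: 2.0 for f in OUTCOMES}   # innate association: the brainstem flees from both
V = {f: 0.0 for f in OUTCOMES}   # critic: expected reward after seeing each feature

def p_fire(f):
    """Logistic gate: probability that feature f triggers the flee reflex."""
    return 1.0 / (1.0 + math.exp(-w[f]))

rng = random.Random(0)
features = list(OUTCOMES)
for _ in range(5000):
    f = rng.choice(features)
    p = p_fire(f)
    fired = rng.random() < p
    r = OUTCOMES[f][0] if fired else OUTCOMES[f][1]
    delta = r - V[f]                          # one-step episode, so TD error = r - V(s)
    V[f] += ALPHA_V * delta                   # critic update
    w[f] += BETA * delta * ((1.0 if fired else 0.0) - p)   # actor: delta * d log pi / d w

print({f: round(p_fire(f), 3) for f in OUTCOMES})
```

Both features start with the same innate tendency to trigger fleeing. Because fleeing from a harmless leaf shadow costs foraging reward, its weight is driven down until the reflex is mostly suppressed, while the weight for the predator shape is pushed further up: the critic lets the agent overrule the brainstem in one case and reinforce it in the other.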

The fundamental benefit of this independent critic is that it allows us to start learning about the contingencies of reward in the environment and to proactively optimize objectives instead of just being reactive. This is the fundamental origin of agentic behaviour. While initially only serving as a critic for brainstem reflexes, this extra system develops its own machinery for predicting and understanding reward in the environment, and, by interfacing directly with the brainstem itself, it can begin to take actions in a goal-directed way, actions which it has learnt will yield lots of reward in the future. This allows the learning of complex habits beyond evolutionarily hardcoded stimulus-response patterns. For instance, it allows an animal to go foraging and then return to a specific point (its nest). It allows for the development of temporally extended behaviour, for instance storing food or water for later consumption. It can learn to wait and set up ambushes for prey. The schematic of our brain now looks as follows:

By this point we are already getting close to a pretty good brain. We have a system which can parse its sensory input into a set of features, partially learned and partially hardcoded. It can take these features and associate them with the survival reflexes hardcoded in the brainstem, allowing it to predict and pre-empt them. It can perform classical and operant conditioning and associate arbitrary conditioned stimuli with primary rewards. Moreover, it has a general reward system built in that allows it to perform simple reinforcement learning, which, if hooked up directly to brainstem reflexes and action selection mechanisms, allows the emergence of agentic, longer-term behaviour.

This system already possesses the agentic and survival-related ‘core’ of a general intelligence. The only real challenge here is scalability. This agent relies entirely on simple model-free reinforcement learning to choose actions, and on simple stimulus-response associations to trigger brainstem survival reflexes. The sensory input the critic and association areas receive is mostly in the form of preset feature detectors, with some basic learned representations as well. The critic also receives complex physiological state information from the brainstem and can include it in its reward function.

In a real brain, of course, by this point there is quite a complicated infrastructure around these basic tasks. For example, brains often split aversive and appetitive rewards and stimuli and process them differently. Typically, there are also specialized brain regions to handle the complex array of physiological states that need balancing, and a complex mechanism that allows these states to influence decision making flexibly. Nevertheless, this is the basic schematic “API” of the core functions of the brain, and it suffices to develop a creature capable of flexible adaptive behaviour.

Now the problem is scalability. Animals which can extract more complex or more abstract patterns from their sensory data, or plan further into the future, or which, through sexual selection, need to produce increasingly complex patterns such as birdsong, will be at an evolutionary advantage. These capabilities require a very high degree of within-lifetime learning. They are too complex and context-dependent ever to be specified directly in the genome, or even reinforced from basic physiological rewards; instead they must be learnt across the lifetime. Although some basic learning capabilities have already been developed, these have operated within the limits of an already constructed system. Now, instead, the brain needs a way to learn from scratch. To solve this problem, we (evolution) invent the cortex (the pallium in birds). Cortex provides the neural substrate for a fully general learning mechanism, capable of extracting and learning arbitrary patterns from data. Cortex starts out uninitialized, with random connections providing useless information. Only during the animal’s lifetime does it learn and start becoming useful. This also means cortex can only evolve once the animals themselves, and the multi-agent world they inhabit, become sufficiently complex, and their lifespans sufficiently long, that paying the upfront costs of this generic learning algorithm becomes worthwhile.

The cortex also fully exploits a relatively new principle: unsupervised predictive learning. Previously, the brain relied on direct supervisory signals, either from the hardcoded reflexes of the brainstem or from primary reward information. The cortex does not use this information, except as an additional modulatory signal; instead it develops its representations by learning to predict its incoming sense data over various timescales. This predictive learning provides a dense and rich supervisory signal which allows the cortex to scale up and make the most of all its incoming data. However, the addition of cortex presents the brain with another integration challenge. While the cortex runs its own predictive learning algorithm, its outputs are extremely dense, high-dimensional embedding vectors, which are not immediately interpretable to the rest of the brain. But the brain has already dealt with this problem once before, when it had feature vectors coming from the sensory midbrain. The solution is an association area which learns to connect these embedding vectors with survival-relevant activations in the brainstem. Importantly, unlike the original case, such association areas already exist in the midbrain, so we don’t need to reinvent the wheel. Instead, we simply add the new cortical inputs to the existing association area and use the same Hebbian trick to learn the right associations between (now) cortical inputs and brainstem reflexes (arguably the cortex also develops its own association area, the thalamus, although I’m not clear on the evolutionary timeline of this).
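As a minimal sketch of learning without any external teacher, the code below trains a linear predictor to guess the next input from the current one, using the prediction error as the only learning signal. It assumes a made-up sensory stream of four one-hot patterns that usually cycle in order but occasionally jump at random; real cortical predictive learning is, of course, far richer than a single linear map.

```python
import random

DIM, LR = 4, 0.05   # number of input channels and learning rate (assumed)

def one_hot(i):
    return [1.0 if j == i else 0.0 for j in range(DIM)]

def predict(W, x):
    """Linear prediction of the next input from the current one."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]

# Made-up sensory stream: patterns usually cycle 0 -> 1 -> 2 -> 3 -> 0 ...,
# but 10% of the time the next pattern is random. There are no labels anywhere.
rng = random.Random(0)
stream, state = [], 0
for _ in range(3000):
    stream.append(one_hot(state))
    state = rng.randrange(DIM) if rng.random() < 0.1 else (state + 1) % DIM

W = [[0.0] * DIM for _ in range(DIM)]
errors = []
for x_t, x_next in zip(stream, stream[1:]):
    err = [a - b for a, b in zip(x_next, predict(W, x_t))]   # the next input is the target
    errors.append(sum(e * e for e in err))
    for i in range(DIM):
        for j in range(DIM):
            W[i][j] += LR * err[i] * x_t[j]

early, late = sum(errors[:50]) / 50, sum(errors[-500:]) / 500
print(round(early, 3), round(late, 3))
```

The learned weights come to encode the transition structure of the stream, and the average squared prediction error late in training is well below its initial value, all without any reward or reflex signal.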

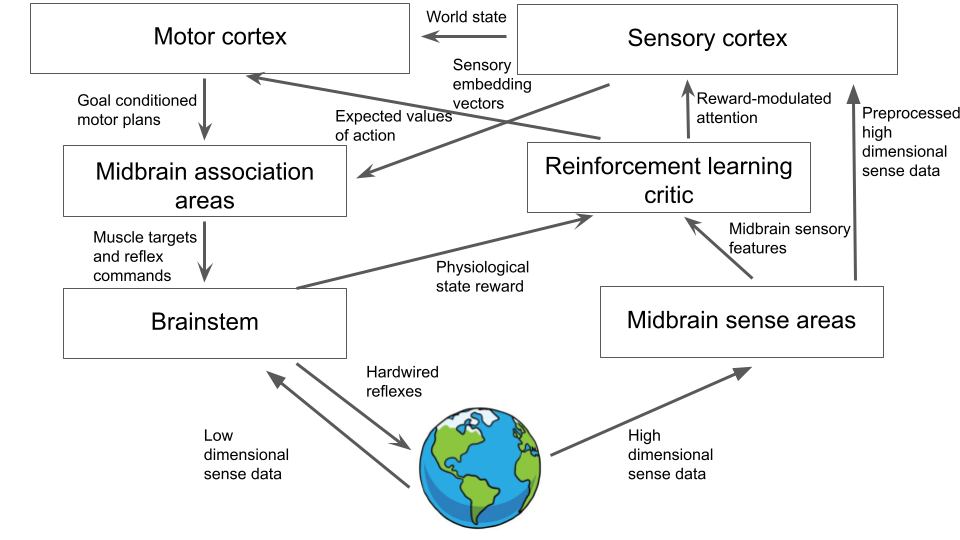

This means that, even though cortex is a tremendous gain in capabilities, in terms of the brain API it interfaces extremely simply with the agentic core. Namely, there are two interfaces where sense data enters the core. The first is the amygdala, or reflex association area, where processed sensations in the form of features are associated with brainstem reflexes to enable classical conditioning. Here, we simply add another input to the amygdala so that it now computes a three-way correlation among high-level cortical embeddings, midbrain feature vectors, and brainstem activations. The connections from the amygdala that inhibit or activate brainstem responses don’t need to change at all; they just need to be triggerable by cortical inputs as well. (At this point, the midbrain sensory inputs may become redundant, since the cortex performs their function better. The end result tends to be either that the midbrain sensory regions become just a preprocessing step for the cortex, as in audition, or that they form a fast direct path detecting hardcoded survival-relevant templates, as in vision.)

The second interface is the reward system. For reinforcement learning, we can use the same algorithms as before, merely updating our value function and reward estimators (centred on the dopaminergic neurons of the VTA) to take cortical embeddings as input, and then flexibly learning how these correspond to reward using the existing circuitry. This means that the midbrain becomes the interface between cortex and brainstem. Importantly, the brainstem never needs to know that there is a cortex at all. Since the cortex is fully encapsulated and interacts with the agentic core only through these two connections to the midbrain, it can scale indefinitely without needing significant architectural rewiring. Effectively, adding more cortex just means that the embedding vectors get better. If evolution needs to increase the ‘intelligence’ of the animal, it can just add more cortex.
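The encapsulation argument can be sketched as a plug-in interface. In the hypothetical Python below, each sensory module (a midbrain `Tectum` or a `Cortex`) only has to implement `encode`, returning a sparse dict of named features; the `Midbrain` class merges these for its association weights and critic, and its brainstem-facing output (one drive per reflex) is unchanged when a cortex of any width is added. All class names and the toy word-bucketing ‘embedding’ are illustrative assumptions, not a model of real cortex.

```python
from typing import Dict, List, Protocol, Tuple

class Encoder(Protocol):
    def encode(self, observation: str) -> Dict[str, float]: ...

class Tectum:
    """Midbrain sensory module: one hardcoded 'looming object' detector."""
    def encode(self, observation: str) -> Dict[str, float]:
        return {"tectum:looming": 1.0} if "looming" in observation else {}

class Cortex:
    """Stand-in for a learned encoder whose output width can grow freely."""
    def __init__(self, width: int):
        self.width = width

    def encode(self, observation: str) -> Dict[str, float]:
        emb: Dict[str, float] = {}
        for word in observation.split():
            # toy 'embedding': bucket each word deterministically into `width` slots
            key = f"cortex:{sum(map(ord, word)) % self.width}"
            emb[key] = emb.get(key, 0.0) + 1.0
        return emb

class Midbrain:
    """Association area plus critic; both consume one merged feature dict."""
    def __init__(self, encoders: List[Encoder], reflexes: List[str]):
        self.encoders = encoders
        self.reflexes = reflexes                        # fixed brainstem-facing API
        self.assoc: Dict[Tuple[str, str], float] = {}   # (feature, reflex) -> weight
        self.value: Dict[str, float] = {}               # linear critic weights

    def features(self, observation: str) -> Dict[str, float]:
        merged: Dict[str, float] = {}
        for enc in self.encoders:
            merged.update(enc.encode(observation))      # prefixes keep sources apart
        return merged

    def drive(self, observation: str) -> Dict[str, float]:
        feats = self.features(observation)
        return {r: sum(self.assoc.get((f, r), 0.0) * v for f, v in feats.items())
                for r in self.reflexes}

    def value_estimate(self, observation: str) -> float:
        feats = self.features(observation)
        return sum(self.value.get(f, 0.0) * v for f, v in feats.items())

midbrain = Midbrain([Tectum()], reflexes=["flee", "eat"])
midbrain.assoc[("tectum:looming", "flee")] = 1.0
before = midbrain.drive("looming shape overhead")

midbrain.encoders.append(Cortex(width=1024))   # "add more cortex": nothing else changes
after = midbrain.drive("looming shape overhead")
print(before, after)
```

Adding a `Cortex` with a larger width changes only what the association and critic weights can key on; the set of reflexes the brainstem sees stays the same.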

Ultimately, cortex will develop a complex hierarchy of layers and regions, a general hub (the thalamus) which largely controls its interfaces with the midbrain regions, and a set of motor control regions which, in primates including humans, have largely supplanted the original midbrain and brainstem APIs. Nevertheless, the basic modular structure remains the same. Cortex interfaces with the brainstem in two ways. First, through an associative pathway based around the amygdala, but also including the habenula, the periaqueductal grey, and similar regions, which control the brainstem’s reactions to stimuli and underlie conditioning behaviour. Second, through the basal ganglia circuits, which implement model-free reinforcement learning and allow for the development of agentic behaviour based around maximizing reward. These midbrain regions then control the ‘drivers’ which interact directly with the brainstem, which in turn controls most basic survival-relevant functionality. These three levels also form a hierarchy of learning capabilities. The brainstem performs relatively little learning itself but contains hardcoded survival instincts. The midbrain merely learns to associate existing feature vectors with brainstem responses, via Hebbian plasticity in the case of the amygdala, and via dopamine-based temporal difference learning in the basal ganglia. The cortex, on the other hand, learns everything from scratch through self-supervised predictive learning, and then passes these learnt embeddings of its input on to the midbrain.

A similar process applies to motor control. So far we have assumed that all motor outputs are brainstem reflexes, but these have nowhere near the complexity and flexibility needed to underlie behaviour in any complex organism. Instead, even simple organisms have specialized midbrain motor nuclei which receive reward and action information directly from the critic and output motor plans which are then sent to the brainstem. These function much like the sensory association areas, in that they provide an interface between the critic and the brainstem (in this case not reflexes, but the spinal cord nerves directly). Cortex again just provides an additional layer on top of this system. Now we have a motor cortex which, when hooked up to the reward signal, can output its own goal-conditioned motor trajectories, which are transmitted down to the midbrain motor ‘association areas’ for eventual implementation in the spinal cord.

Our final brain architecture now looks like this:

Thanks to the cortex, this architecture can learn and maintain extremely complex world models of sensory stimuli, and perform flexible planning to obtain goal-conditioned trajectories for action within them. By and large, this is the abstract architecture found in the mammalian brain, and it therefore undergirds our own general intelligence. We have come a long way from a simple, evolutionarily hardcoded stimulus-response architecture. Nevertheless, at each step, while we have added complexity, we have created a functional and useful system. Moreover, this necessity of being functional at all times has effectively forced modularity and extensibility into the design of the brain, properties which help us immensely as neuroscientists trying to understand the details of how each part works.

Further questions and untied threads

1.) The cerebellum. This is an evolutionarily ancient structure, but I still don’t really understand what it’s good for, or how it fits into the API. It appears to do something with supervised learning, and perhaps amortized learning of motor commands, i.e. cleaning up noisy motor commands. But why? There is also intriguing evidence that it has much wider cognitive functions, although precisely what it does is unclear.

2.) How do more advanced cognitive abilities such as planning and long-term memory fit into the API? Currently, cortex is thought of here as essentially a more advanced sensory system which just produces embedding vectors, but beyond this, the cortex is also vital for advanced capabilities like long-term planning and memory, as well as metacognition. How do these interface with the midbrain API, if indeed they do at all? Is there a ‘lower-level cortex’ which provides midbrain drivers that these higher-level capabilities then use to communicate with the midbrain, resulting in a four-tiered architecture instead of a three-tiered one, with two tiers of cortex? Currently, we have motor cortex producing ‘goal-conditioned action plans’; how exactly does this work?

3.) How does credit assignment work in this system, especially between the cortical and midbrain regions? Even if something like backprop operates within the cortex, which is physiologically fairly uniform, it seems unlikely that it can pass through the entire system down to the midbrain. This is especially important given that the role of the midbrain, serving as the routing layer between cortex and brainstem, is absolutely critical for any kind of useful behaviour, and that its core function is learning to interpret cortical activity patterns and translate them into brainstem reflexes.

4.) Why is there so much heterogeneity in the midbrain and brainstem? Unlike cortex, which is highly uniform, the brainstem and midbrain have many different regions, and often loads of small nuclei each specialized for one particular function. Integrating and wiring such a mess together would be a huge problem, and the same goes for the midbrain regions which have to learn to route this information to the correct destination. Why is there such heterogeneity at the low levels of the brain, and less, it seems, at higher levels?